#IJCAI2025 distinguished paper: Combining MORL with restraining bolts to learn normative behaviour - Robohub

Source: robohub

Published: 9/4/2025


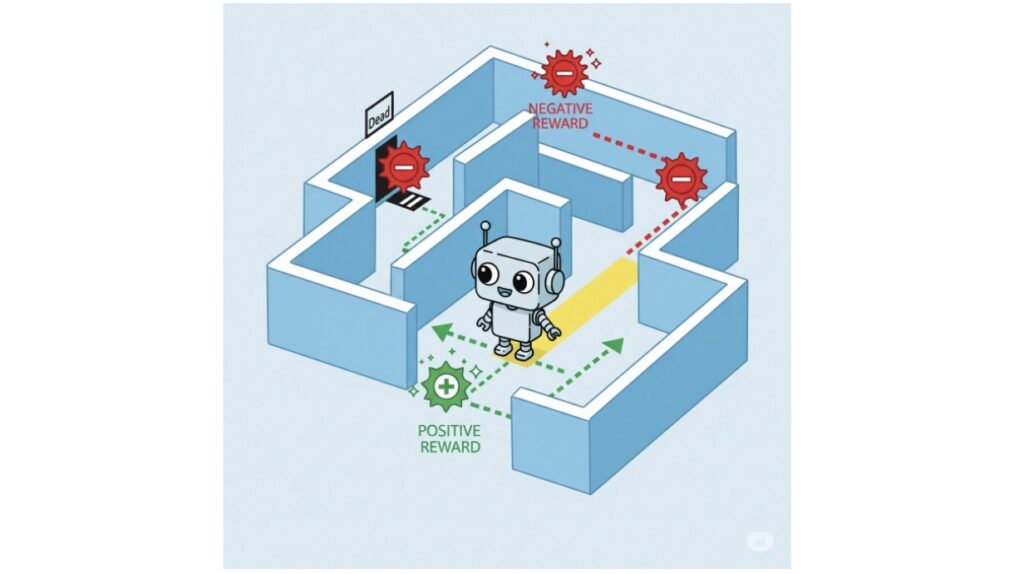

The article covers work presented at IJCAI 2025 on integrating Multi-Objective Reinforcement Learning (MORL) with restraining bolts so that AI agents can learn normative behavior. Autonomous agents powered by reinforcement learning (RL) are increasingly deployed in real-world applications such as self-driving cars and smart urban planning. RL agents excel at optimizing behavior to maximize reward, but unconstrained optimization can produce actions that are efficient yet unsafe or socially inappropriate. To address safety, formal methods such as linear temporal logic (LTL) have been used to impose constraints that keep agents within defined safety parameters.
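As a rough illustration of what an LTL-style safety constraint can look like in practice, the sketch below checks the safety formula `G(!forbidden)` ("the forbidden label never holds") over a finite trace of labelled states. The function name, the label names, and the finite-trace simplification are assumptions made for illustration; they are not taken from the paper or the article.

```python
from typing import Iterable, Set


def always_avoids(trace: Iterable[Set[str]], forbidden: str) -> bool:
    """Finite-trace reading of the safety formula G(!forbidden).

    Each element of `trace` is the set of labels that hold in one state.
    Returns True only if `forbidden` holds in none of the states.
    """
    return all(forbidden not in labels for labels in trace)


# Hypothetical traces for a driving agent (labels are illustrative only).
safe_trace = [{"on_road"}, {"on_road", "near_crossing"}, {"on_road"}]
unsafe_trace = [{"on_road"}, {"on_sidewalk"}, {"on_road"}]

print(always_avoids(safe_trace, "on_sidewalk"))
print(always_avoids(unsafe_trace, "on_sidewalk"))
```

A check of this kind only says whether a hard safety property was violated; it does not by itself capture obligations or permissions.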

However, safety constraints alone are insufficient when AI systems interact closely with humans, as normative behavior involves compliance with social, legal, and ethical norms that go beyond mere safety. Norms are expressed through deontic concepts—obligations, permissions, and prohibitions—that describe ideal or acceptable behavior rather than factual truths. This introduces complexity in reasoning, especially with contrary-to-duty

Tags

robot, artificial-intelligence, reinforcement-learning, autonomous-agents, safe-AI, machine-learning, normative-behavior